HDS HAM vs VPLEX Metro

Posted on December 4, 2012 by TheStorageChap in HDS, VPLEX

Whilst there has not been a specific blog post lately, there has been a lot of discussion on the "Why IBM SVC and EMC VPLEX are not the same" post that I originally published back in August. Until recently, though, I have not really felt the need to tackle HDS HAM vs VPLEX Metro.

I am amazed at the traction we are getting with VPLEX and the Active/Active 'bandwagon' we seem to have created that all the vendors want a piece of. An increasing number of solutions claim to be active/active, but you have to understand the differences at the architecture level to fully appreciate what is really active/active.

HDS HAM vs VPLEX

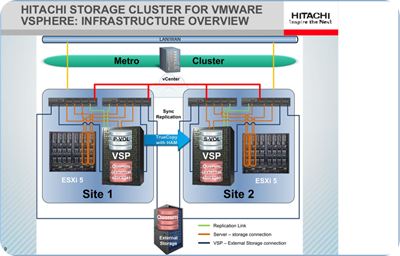

Recently HDS re-launched their High Availability Manager (HAM) solution, more specifically how HAM can be used in conjunction with vMSC (vSphere Metro Storage Cluster). This recent announcement has led to some confusion when comparing HDS HAM with VPLEX Metro for VMware use cases.

HAM is actually a collection of components, specifically:-

- Two (and only two) Hitachi VSP/USP-Vs

- High Availability Manager

- Hitachi Dynamic Link Manager (HDLM) multi-pathing software

- TrueCopy Synchronous Replication

- A FC Quorum in a third site, campus distance from the other two VSP/USP-Vs

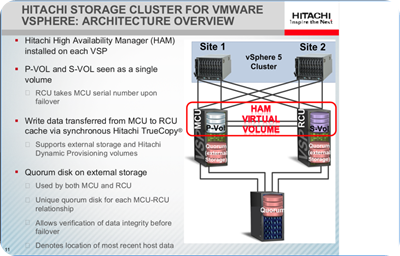

There are no real grey areas in relation to the question of active/active or active/passive; this is absolutely an active/passive solution. It uses active/passive array-based replication between the VSP arrays, and for the ESX host it's an active/hot-standby deployment where HDLM automates path failover to the second site.
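To make the distinction concrete, here is a minimal Python sketch of an active/hot-standby pair as described above. It is a toy model, not HDS code: the class and method names (`ReplicatedVolume`, `write`, `fail_over`) are hypothetical, and real HDLM path failover and TrueCopy replication are considerably more involved.

```python
from dataclasses import dataclass, field


@dataclass
class ReplicatedVolume:
    """Toy model of an active/passive replicated volume (hypothetical names)."""
    active_site: str = "site1"    # only this site accepts host writes
    standby_site: str = "site2"   # hot standby, not writable
    data: dict = field(default_factory=dict)

    def write(self, site: str, key: str, value: str) -> bool:
        # Active/passive: a write is only accepted on the active side.
        if site != self.active_site:
            return False
        self.data[key] = value    # synchronous copy to the standby is implied
        return True

    def fail_over(self) -> None:
        # Failover swaps the roles; there is still only one writable side.
        self.active_site, self.standby_site = self.standby_site, self.active_site


vol = ReplicatedVolume()
print(vol.write("site1", "blk0", "a"))  # accepted on the active side
print(vol.write("site2", "blk1", "b"))  # rejected on the standby side
vol.fail_over()
print(vol.write("site2", "blk1", "b"))  # accepted once site2 is active
```

The point of the sketch is that at any moment exactly one site accepts writes; an active/active design would accept the write from either site.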

In a VMware environment the full list of required components, which you could argue adds operational complexity, is:-

- HDLM suite on vCenter

- Multipathing (HDLM) driver on each ESX host

- Raid Manager on a remote management client or vCenter server

- Two configuration files are defined on the vCenter server. These files define the copy pairs.

- To control the VSP replication from vCenter, two Command Devices (equivalent to Symmetrix Gatekeepers – one from each VSP) are needed as Raw Device Mappings

- The HAM Pairings are created on the VSP array layer with the Hitachi Storage Navigator GUI

I have several concerns about the HAM architecture.

- Quorum – It is not just that the FC LUN quorum must be within campus distance and accessible by the two VSP/USP-Vs. My BIG issue is that if the quorum goes offline or becomes inaccessible, all replication stops. In a HDS HAM vs VPLEX Metro comparison, the VPLEX Witness is a lightweight VM that requires only IP connectivity to the two primary sites and can be up to a 1 second RTT from each site, so effectively thousands of miles/km away.

- WAN Failure – A failure of all WAN links will cause a complete site failover and production outage. The secondary S-Vols are r/w enabled and the primary volumes are disabled. According to the HDS documents, the ESX hosts have to be manually rebooted to get a HA restart in the secondary site. In a HDS HAM vs VPLEX Metro comparison, in a VPLEX Metro configuration each volume continues on its preferred site and all VMs stay online. If no cross-connect is enabled and the VMs run on the non-preferred site, VMware HA automatically restarts the VMs with no manual intervention.

- Failback – So you have failed over with HAM and everything is now running from the S-Vol. You have now resolved your issues and want to fail back. TrueCopy has to be reversed and enabled to replicate from site 2 back to site 1. Every ESX host in Site 1 must then be touched, with CLI commands issued to bring the local HAM paths back online. When the paths to the P-VOL are enabled, HAM will force another failover, back to Site 1. Now, again, the TrueCopy replication must be reversed and enabled. Again in a HDS HAM vs VPLEX Metro comparison, in a cross-connected VPLEX Metro configuration there is no concept of failover and failback. Everything is automatic.
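As a rough way of sizing the failback concern, the sketch below lists the HAM failback sequence exactly as described in the bullet above and counts how many operator actions it implies for a given number of ESX hosts in Site 1. The `Step` type, the `manual_actions` helper and the idea of counting actions are my own illustrative assumptions, not anything from HDS or EMC tooling.

```python
from typing import List, NamedTuple


class Step(NamedTuple):
    description: str
    manual: bool     # does an operator have to perform it?
    per_host: bool   # repeated on every ESX host in Site 1?


# HAM failback as described in this post (wording paraphrased).
HAM_FAILBACK: List[Step] = [
    Step("Reverse and enable TrueCopy, site 2 -> site 1", True, False),
    Step("Bring local HAM paths online via CLI", True, True),
    Step("HAM forces failover back to site 1", False, False),
    Step("Reverse and enable TrueCopy, site 1 -> site 2", True, False),
]

# Cross-connected VPLEX Metro: no failover/failback concept, so no steps.
VPLEX_FAILBACK: List[Step] = []


def manual_actions(steps: List[Step], site1_hosts: int) -> int:
    """Count operator actions; per-host steps are multiplied by host count."""
    return sum(
        (site1_hosts if s.per_host else 1) for s in steps if s.manual
    )


print(manual_actions(HAM_FAILBACK, site1_hosts=8))    # 10
print(manual_actions(VPLEX_FAILBACK, site1_hosts=8))  # 0
```

The per-host step is what makes the HAM number grow with the size of the cluster; the two TrueCopy reversals are a fixed cost.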

With that in mind, the key takeaways are:-

- VSP with vMSC only works in Uniform Host Access mode (i.e. requires cross connects) – See the whitepaper at http://www.emc.com/collateral/software/white-papers/h11065-vplex-with-vmware-ft-ha.pdf

- VSP with vMSC is an active/passive solution with only one side being writable at a time

- VSP with vMSC requires the use of a third-site Quorum disk that is used for data integrity upon site failover

- Loss of Quorum disk or access to Quorum disk breaks replication and prevents site failover

- Total Loss of WAN links between sites results in critical failure requiring manual restart/failover of VMs

- vMSC utilises HAM and TrueCopy, therefore vMSC is only supported with VSP

- Most of the HAM management is Command Line driven: check status, view pairings, failback, etc.

As always if any of these statements are incorrect, please comment so that they can be corrected.
