HP 3PAR Peer Persistence vs EMC VPLEX Metro

Posted on November 14, 2013 by TheStorageChap in VPLEX

I cannot believe that I have not had a blog entry since June! Things have been a little crazy of late both at work and at home, but today I find myself with 15 minutes to spare and wanted to talk about HP 3PAR Peer Persistence vs EMC VPLEX Metro.

Over the last 12 months the concept of the active/active datacenter has really gathered momentum and is appearing as a requirement in many more customer RFPs. This has inevitably led to more and more companies claiming active/active storage capability across sites, and one of those claims is made for HP 3PAR’s Peer Persistence.

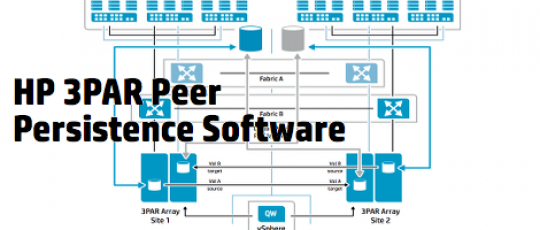

HP 3PAR Peer Persistence is coming up more and more in conversation and is another example of a ‘good enough solution’ at an appealing price point that provides features that could appear to be similar to a VPLEX Metro solution. HP’s primary use case is in conjunction with VMware, where they offer VMware HA and vMotion solutions, like we do with VPLEX Metro; they are also vMSC certified. Whilst they don’t really talk about support for things like Microsoft Windows Failover Cluster and Oracle RAC, I can see no reason why it would not work. It also has a VM based witness model, like VPLEX, to mitigate ‘split brain’ concerns.

However, there are some differences to note:

- HP Peer Persistence only works between HP 3PAR arrays; VPLEX is truly heterogeneous. Admittedly this will be less of a concern for the majority of mid-market customers wanting to set up relationships between two HP 3PAR arrays or two VNX arrays etc., but it is worth understanding the potential for storage vendor lock-in.

- The Peer Persistence relationship is only between two HP arrays. The VPLEX relationship is between two sites with multiple arrays. As with the previous point, though, this will be less of a concern for most mid-market customers.

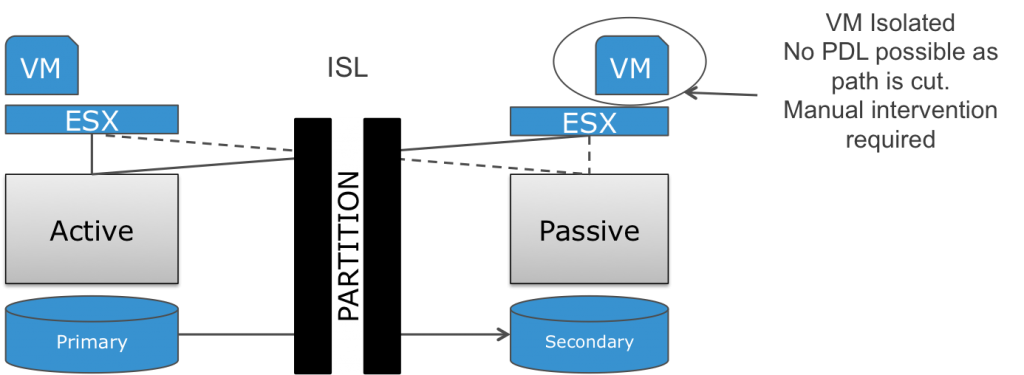

- Whilst HP specify ‘Load Balancing Across Sites’, it is important to understand that the architecture is Active/Passive at a LUN level, not truly Active/Active like VPLEX. This means that a single LUN is only served from a single storage array. In a VPLEX Metro environment the I/O is served from both arrays, enabling better asset utilisation. However, depending on their architectures, for many customers this is a moot point. HP is no different to IBM SVC Split Node, NetApp MetroCluster or HDS HAM configurations in this regard. But understand that in this type of architecture, which relies on Uniform Access in VMware terms, it is more likely that manual intervention will be required in the event of a WAN partition than with VPLEX. Bottom line: if you are buying the solution for continuous availability or fully automatic failover, this could be an issue, as you will always have the fear of being in a situation that could require manual intervention.

- This also means that VPLEX will use significantly less bandwidth between sites as reads and writes do not go over the WAN multiple times.

- HP Peer Persistence only supports FC connectivity between sites. VPLEX also supports IP connectivity between sites, making site connectivity more flexible and cost effective.

- HP Peer Persistence requires fabrics to be stretched across sites (like SVC, NetApp etc.). VPLEX does not require that fabrics are stretched across sites.

- With HP Peer Persistence, each host must be connected to each storage array (ALUA). With VPLEX, hosts only need to be connected to the nearest VPLEX, which reduces the need for ISLs etc. between sites.

- The maximum RTT between sites supported by HP Peer Persistence is 2.6ms. VPLEX Metro supports 5ms (10ms for vMotion use cases), enabling sites to be separated over greater distances.

- The maximum RTT for the Witness site connectivity with HP Peer Persistence is 150ms. VPLEX supports a 1 second RTT, providing additional flexibility in regard to the placement of the third site.

- Implementing VPLEX Metro means that by default you also get the storage virtualisation capabilities offered by VPLEX Local (mobility, building blocks etc.). HP 3PAR does not enable heterogeneous storage virtualisation.
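
To make the latency limits in the list above a little more concrete, here is a minimal Python sketch that checks a pair of measured round-trip times against the figures quoted in this post. The names `RttLimits`, `fits` and `candidates` are purely illustrative and do not come from any HP or EMC tool. The numbers are the ones stated above, so verify them against current vendor documentation before relying on them. The 10ms vMotion figure for VPLEX is deliberately not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RttLimits:
    """Maximum supported round-trip times, in milliseconds (hypothetical helper type)."""
    name: str
    inter_site_ms: float  # limit between the two production sites
    witness_ms: float     # limit between a site and the witness


# Figures as quoted in this post; treat them as assumptions, not vendor-verified values.
PEER_PERSISTENCE = RttLimits("HP 3PAR Peer Persistence", 2.6, 150.0)
VPLEX_METRO = RttLimits("EMC VPLEX Metro", 5.0, 1000.0)


def fits(limits: RttLimits, inter_site_rtt_ms: float, witness_rtt_ms: float) -> bool:
    """Return True if both measured RTTs are within the quoted limits."""
    return (inter_site_rtt_ms <= limits.inter_site_ms
            and witness_rtt_ms <= limits.witness_ms)


def candidates(inter_site_rtt_ms: float, witness_rtt_ms: float) -> list[str]:
    """List the solutions whose quoted RTT limits accommodate the measured values."""
    return [
        limits.name
        for limits in (PEER_PERSISTENCE, VPLEX_METRO)
        if fits(limits, inter_site_rtt_ms, witness_rtt_ms)
    ]


if __name__ == "__main__":
    # Example: 4ms between sites, 300ms to the witness site.
    print(candidates(4.0, 300.0))
```

With 4ms between sites and 300ms to the witness, only VPLEX Metro is within its quoted limits; at 2ms and 100ms both qualify. Latency is of course only one of the differences listed above.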

The question is, can you afford to compromise?

As usual, if I have got any of this wrong, please feel free to leave a comment and correct me!
